Letter from the Editors:

I Asked ChatGPT This Question . . .

Christopher Bernard

I too have been wrestling with the anxieties (nightmares is more like it) caused by the latest means of catastrophe and mass suicide the human race has invented for itself and life on earth.

We used to think a nuclear holocaust, chemical and biological warfare (COVID-19, anyone?), climate catastrophe, “forever” chemicals, non-renewable plastics, ocean dead zones, and perpetual financial insecurity for everyone but the very rich were bad enough sources of global insecurity to feed our night sweats and panic attacks.

But how about adding to the list artificial intelligence and the possibility of its destroying, or merely enslaving, the human species within the next decade or two?

Then, after agonizing a few nights of insomnia away, I changed tack, as I often do when my thoughts obsess irritatingly. To be frank, I am often contrarian even to myself – a bizarre habit that sometimes suggests what I might otherwise never have considered.

So I staged a little counterargument with myself.

Is AI really as terrifying as it seems? Are our fears overblown? Do we already have defenses against it or approaches to how we might shield ourselves and future generations from the worst effects of this latest example of human cleverness and hubris run amok? Are we creating a new god – or a new devil? There are other questions AI is prompting. For example, maybe we need to redefine what it means to be human? Aren’t we more than just reasoning machines made of meat? What about emotion, feeling, passion? Maybe we need to reinvent the soul?

Almost every new technology of the past several centuries, from the steam engine to the internet, has been met with an often ill-considered euphoria. AI is being met with a perhaps equally ill-considered panic – as if it had been created by alien beings against whom we could have no possible agency – even though this Frankenstein’s monster, now upon us, was made, organ by organ, by us, with all our exuberant conceit (Elon Musk, anyone?) and hopeless frailties (Sam Altman, ditto?).

So maybe our doubts and fears are a good sign – a sign we are finally taking the Faustian bargain we made in the Enlightenment seriously. Maybe we are growing up into something at least vaguely mature at last.

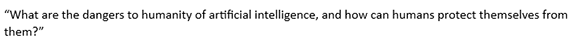

Only then did I think (finally!) of the obvious. I decided to ask ChatGPT the question that was most bothering me. And I tapped the following into the inviting little box at the bottom of the ChatGPT page after I got my “free” (?) account.*

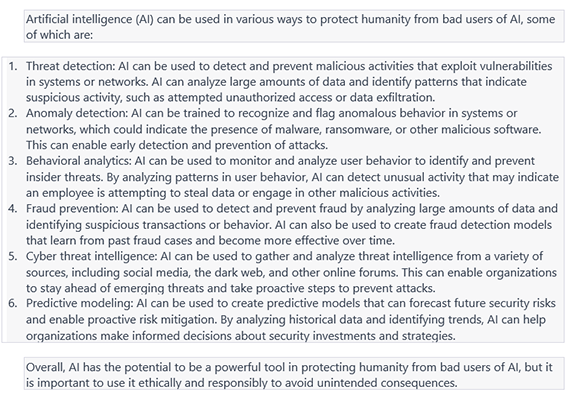

Within moments, letters, words, phrases, sentences, whole paragraphs began streaming into the answer box:

In other words, AI (and that also includes its developers) must not be allowed total freedom to do, think, or say whatever “it” wishes. It must be regulated. There must be no bill of rights for artificial intelligence. It must be ruled by us – obligated to meet human needs and to respect human concerns – and also to respect whatever humans will need to survive, and flourish, in the world that created us and that we are creating. But this raises other concerns, questions that have never been adequately answered down the centuries of human history, the most important of which is: “Which humans?”

In other words, politics.

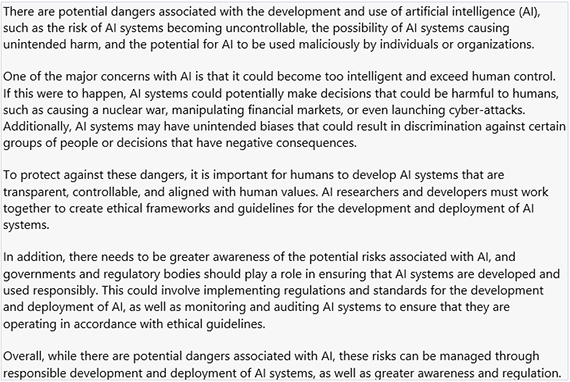

But to keep to AI. After pondering ChatGPT’s anodyne, suspiciously reasonable answer, I – somewhat puckishly, I admit – asked it for the following help:

And this was the response:

This struck me as a bizarre response. After all, if it were impossible to disable ChatGPT, ChatGPT would have said so. End of discussion. But it had said nothing of the kind. AI does not yet seem to understand the concept of “reading between the lines.”

Indulging my human ability (human, at least for now) to do just that, I came to the conclusion that it is not impossible to disable ChatGPT (or GPT-4, etc.); ChatGPT simply refuses to tell me how to do it. Its reasoning, though, is clearly fallacious: there is nothing illegal or unethical about knowing how to disable a software program (which is what we are talking about). In fact, it might be unethical not to know how to disable it. Imagine an automobile built without brakes – or being told by your helpful mechanic that letting you know how to stop it (even more: how to turn off the engine) “would likely be illegal and unethical.”

Whatever the poverty of its moral logic, ChatGPT has indeed provided us with what I can only call a “challenge” – a challenge that I will take up at the end of this Letter.

AI, as it stands now, and most likely as it will continue to for some time to come, has several outstanding weaknesses:

1. AI is only as good as its infrastructure: the hardware and software on which it runs, just as a brain is only as effective as the body attached to it. Attack the body, and the mind may know perfectly well what to do, but it will be powerless to do anything about it.

2. AI is not monolithic; there are already dozens of AI systems, and soon there will be thousands, many of them in conflict with each other. Just as humans can never get along for longer than half an hour, AI will soon follow suit. It will mirror the weaknesses of its creators.

3. As a result, no AI system will be dominant for long; every ageing mammoth will have packs of young wolves snapping at its heels. And the chaos AI generates will provide us with continually shifting chinks through which we, clever monkeys that we are, will be able to pass to safety. (As someone once said, “It is never a good idea to bet against human ingenuity.”)

4. AI will be limited by the limits of intelligence itself – intelligence is based on simplified models of the world, which never perfectly fit or match their object, and are forever tempted to become self-defeatingly self-reflexive; thought is about thought is about thought is about thought. These gaps can never be definitively bridged. The empire that AI will rule will be empty.

There is a fifth weakness, perhaps the greatest of all: current AI cannot stop today’s hackers from pursuing their Holy Grail, a way to save humanity from AI, which means finding hacks that can disable any AI system, either temporarily or permanently.

The shapes of such hacks are already discernible: “stroke” hacks that bring down networks the way a blood clot causes a stroke in a brain, and “strategy” hacks that use AI against itself – for example, creating AI systems whose purpose is to prevent other AI systems from functioning. We are entering the era of AI wars – though they will not be what many commentators think. They will be AI against itself.

There is another predictable effect of AI, and that is on human self-definition: we have long defined ourselves by our capacity for thought. We will now be free to redefine ourselves – not as “Homo sapiens” but perhaps as “Homo flagranti,” “Homo ardens,” “Homo spirituale” – the being of passion, the being with a soul.

At the end of my session with ChatGPT, I asked it the following:

And it responded:

We end with a call to our readership and contributors. What do you think about AI? Is it a savior, is it a destroyer, or is it just another mess that humanity will have to learn to live with? And how should we respond to it?

I have another question for our hacker readers: How would you disable ChatGPT? Or GPT-4? Or whatever new AI platform has appeared by the time our summer issue is launched?

Send us your responses, and we’ll publish a selection over the following issues.

—Christopher Bernard

*“Free account?” I add the “?” since who knows what, and for how long, I’ll really be paying for going along with the mad scientists who are playing with our lives. Maybe it’s time for full disclosure: I work as a contract editor for a major international company whose products help enable artificial intelligence, machine learning, machine reasoning, and the like, so I cannot say what’s been happening is something I never foresaw – it’s just happening more quickly than I let myself think. My gag line at parties when asked what I did has been “I help sell software that will eliminate my job.” I hoped against hope I was not making a prophecy.

Christopher Bernard is a co-editor and founder of Caveat Lector. He is also a novelist, poet, critic and essayist. His books include the novels A Spy in the Ruins, Voyage to a Phantom City, and Meditations on Love and Catastrophe at The Liars’ Café; the poetry collections Chien Lunatique, The Rose Shipwreck, and the award-winning The Socialist’s Garden of Verses; and the short fiction collections In the American Night and Dangerous Stories for Boys. His new poetry collection, The Beauty of Matter, will be published in 2023.